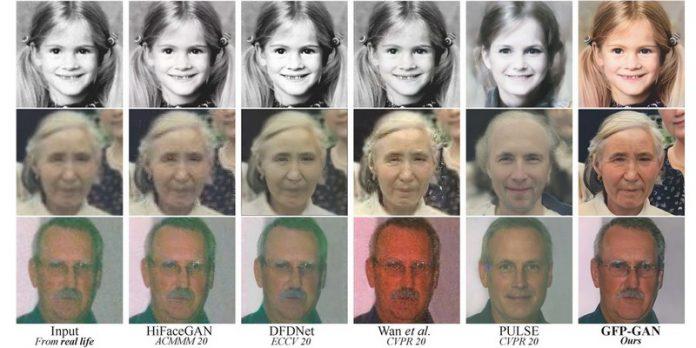

GFP-GAN, an image restoration model exploiting GANs with promising results

As part of a project led by Tencent, researchers have developed a model for restoring images and photographs of faces. It is far from the first tool of its kind, but its results are noticeably better than average, as the various comparisons show (see illustration). The tool uses generative adversarial networks coupled with a degradation removal module.

The challenge of restoring face images and photos using artificial intelligence

Image restoration should not be confused with digital retouching. The two processes are similar, but their techniques and goals differ: restoration is fairly objective, whereas retouching is subjective. Digital retouching aims to make an image look better, while restoration aims to reverse known degradation operations applied to an image so that their effects are no longer visible in the picture.

Facial restoration normally relies on several factors, such as facial geometry. However, the input images are often of very low quality, which makes geometry-based techniques hard to apply and limits restoration applications. To work around this problem, a research team proposes GFP-GAN, which relies on other features and techniques to restore a photograph of a face as faithfully as possible using artificial intelligence.

Their model was the subject of a publication authored by Xintao Wang, Yu Li, Honglun Zhang, and Ying Shan, all working for Tencent's Applied Research Center.

What is the GFP-GAN model made of? How does it work?

To design the GFP-GAN model, the researchers aimed for a good balance between realism and fidelity to the initially degraded image. As the name suggests, it combines a Generative Adversarial Network (GAN) with a Generative Facial Prior (GFP), a component designed specifically for image restoration.

Traditional restoration models relied on GAN inversion: they first invert the degraded image into a latent code that the pretrained GAN can work with, then run image-specific optimization to produce the restoration. GFP-GAN, on the other hand, uses a degradation removal module (a U-Net) and a pretrained face GAN that captures facial features. The two are connected by latent code mapping and by Channel-Split Spatial Feature Transform (CS-SFT) layers.
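To make the channel-split idea concrete, here is a minimal sketch in plain Python. It assumes, following our reading of the GFP-GAN paper, that a CS-SFT layer leaves one part of the GAN feature channels untouched and applies a per-position scale and shift to the rest. The function name `cs_sft`, the half/half split, and the toy list-based feature maps are illustrative assumptions; in the real model the scale and shift are predicted from the U-Net features and everything operates on tensors.

```python
def cs_sft(f_gan, alpha, beta):
    """Toy channel-split spatial feature transform.

    f_gan : list of channels, each a flat list of floats (spatial positions).
    alpha : per-position scales for the modulated channels (same layout).
    beta  : per-position shifts for the modulated channels (same layout).
    """
    half = len(f_gan) // 2            # assumed split point: first half vs. second half
    identity_part = f_gan[:half]      # passed through unchanged
    to_modulate = f_gan[half:]        # scaled and shifted below

    if len(alpha) != len(to_modulate) or len(beta) != len(to_modulate):
        raise ValueError("alpha and beta must cover exactly the modulated channels")

    modulated = [
        [a * x + b for x, a, b in zip(channel, a_ch, b_ch)]
        for channel, a_ch, b_ch in zip(to_modulate, alpha, beta)
    ]
    # Concatenate along the channel axis: untouched channels first, then modulated ones.
    return identity_part + modulated


# Hypothetical example: 4 channels, 2 spatial positions each.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
scales = [[2.0, 2.0], [0.5, 0.5]]
shifts = [[1.0, 1.0], [0.0, 0.0]]
result = cs_sft(features, scales, shifts)
```

Keeping part of the channels untouched is what lets such a layer retain the realism supplied by the pretrained GAN, while the modulated channels pull the output toward the degraded input for fidelity.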

GFP-GAN is trained on the FFHQ dataset, which contains approximately 70,000 high-quality images. All images were resized to 512×512 pixels for training. The model is trained on synthetic data that approximates low-quality real images, and it generalizes to real-world images at inference time. As we can see by comparing the output image with the input image, several types of degradation have been removed.
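The synthetic low-quality inputs can be pictured with a small sketch. The paper, as we understand it, degrades high-quality faces with blur, downsampling, noise, and JPEG compression; the version below is a simplified stand-in that uses a 3×3 box blur instead of a Gaussian kernel and skips JPEG compression entirely. The function `degrade` and its parameters are hypothetical names chosen for illustration.

```python
import random


def degrade(image, scale=2, noise_sigma=5.0, seed=0):
    """Toy degradation of a grayscale image given as a list of rows (values 0-255)."""
    rng = random.Random(seed)
    height, width = len(image), len(image[0])

    def blurred(y, x):
        # 3x3 box filter (stand-in for a Gaussian blur); border pixels are clamped.
        ys = [min(max(y + dy, 0), height - 1) for dy in (-1, 0, 1)]
        xs = [min(max(x + dx, 0), width - 1) for dx in (-1, 0, 1)]
        return sum(image[j][i] for j in ys for i in xs) / 9.0

    # Blur, then downsample by keeping every `scale`-th pixel in each direction.
    small = [
        [blurred(y, x) for x in range(0, width, scale)]
        for y in range(0, height, scale)
    ]

    # Add Gaussian noise and clip back to the valid intensity range.
    return [
        [min(max(v + rng.gauss(0.0, noise_sigma), 0.0), 255.0) for v in row]
        for row in small
    ]


# Hypothetical usage: an 8x8 horizontal gradient becomes a noisy 4x4 image.
clean = [[float(32 * x) for x in range(8)] for _ in range(8)]
low_quality = degrade(clean, scale=2, noise_sigma=5.0)
```

Training pairs built this way give the network a degraded input together with its clean original, which is what makes supervised training on synthetic data possible.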

According to the researchers, the GFP-GAN model with its CS-SFT layers achieves a good balance between fidelity and realism in a single pass. The code is freely available on GitHub.
